Enterprises are just as eager to avoid the risks of AI as they are to capture its value. Over the last three years, as “AI ethics” has proliferated, we’ve seen some firms pay mere lip service to the term, while others walk on eggshells and avoid anything AI-related as an inherently dangerous potential PR gaffe.

The smartest firms, however, are finding the middle ground between these two extremes: a set of internal checks and balances to make sure they can (a) innovate quickly (always a challenge for large organizations) and (b) stay within the boundaries of the law and their own ethical standards.

When it comes to large organizations that need to move quickly, it’s nearly impossible to come up with a better example than the U.S. Department of Defense. In 2021, the Defense Innovation Unit (DIU), the innovation arm of the DoD, released its Responsible AI Guidelines, a set of frameworks designed to help leaders ask questions that guide the Planning, Development, and Deployment of AI, giving projects a strong chance of achieving their intended result without ethical missteps.

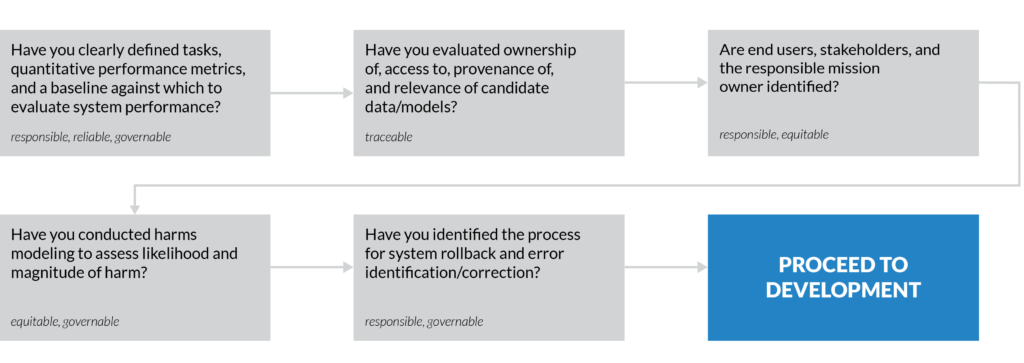

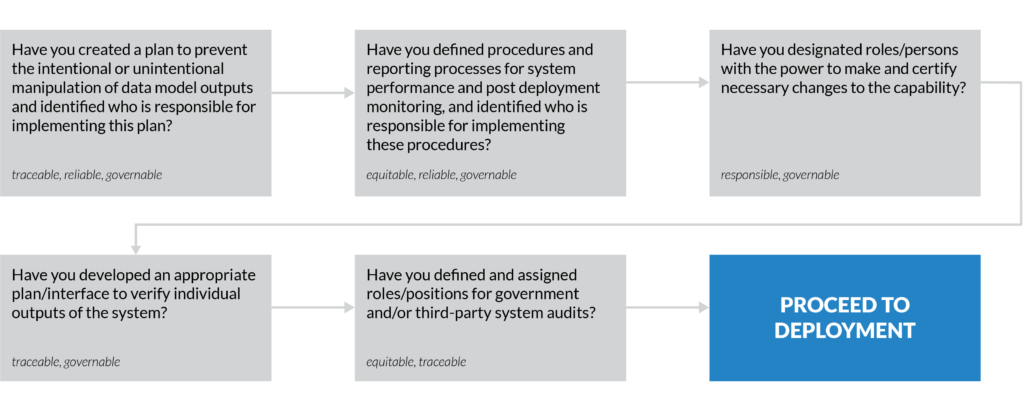

The guidelines create a kind of map of questions and if-then scenarios to validate assumptions and keep a project within the guardrails of its intended purpose.

In this short article we’ll highlight the key workflows listed in the RAI Guidelines, and provide some perspective (from experts at the DIU) about how business leaders might apply these frameworks within enterprise teams:

Phase 1: Planning

Phase 2: Development

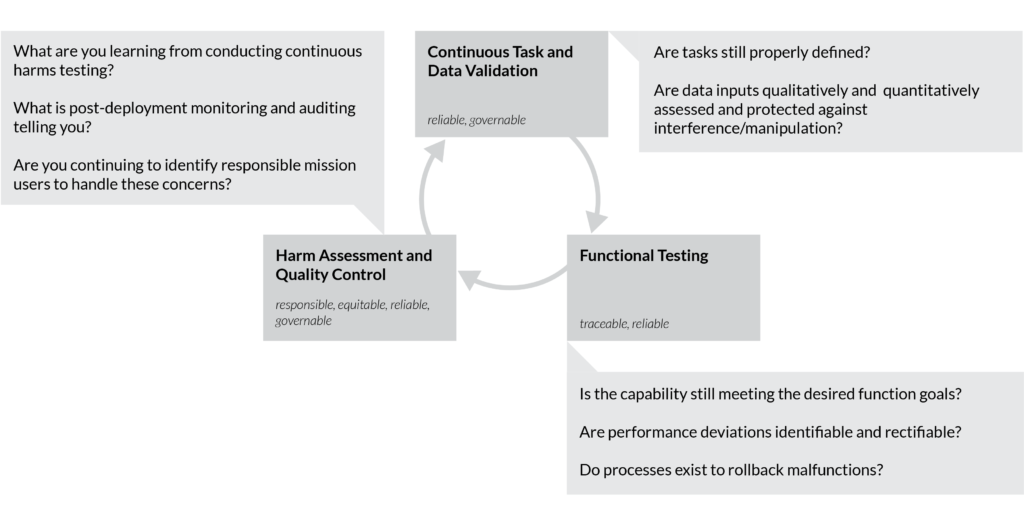

Phase 3: Deployment

(Images source: DIU. Workbooks for each of the RAI Guidelines can be found on the RAI Guidelines page linked above.)

We reached out to the DIU directly to ask how these guidelines might be applied by executive business leaders. DIU AI lead Jared Dunnmon (whom we interviewed last summer) and Carol Smith of the Carnegie Mellon University Software Engineering Institute wrote the following for Emerj’s business audience:

“The level of complexity and effort to develop systems that include artificial intelligence (AI) is apparent but doing it responsibly can be daunting. Organizations doing this work need references to examples of how responsible AI considerations have been put into practice on real-world programs. The Defense Innovation Unit (DIU) has been working over the past year to operationalize the Department of Defense (DoD) Ethical Principles for Artificial Intelligence on DIU programs. While the Principles do not prescribe a methodology or offer concrete directions, they identify a clear need for practical implementation guidelines for the technology development and acquisition workforce.

The DIU released the initial Responsible AI Guidelines in November 2021 along with worksheets to support their implementation specifically due to their work leveraging AI from commercial companies, who wanted to better understand how to interact with the DoD Ethical Principles in their work.

“DIU’s RAI Guidelines provide a step-by-step framework for companies, DoD stakeholders, and program managers that can help to ensure that AI programs align with the DoD’s Ethical Principles for AI and that fairness, accountability and transparency are considered at each step in the development cycle of [a] system,” said Dr. Jared Dunnmon, technical director of the AI/ML portfolio at DIU. The RAI Guidelines were drafted by members of DIU’s AI/ML Portfolio in collaboration with researchers at the Carnegie Mellon University Software Engineering Institute, and incorporate material, insight, and feedback from partners in government, industry, and academia.

DIU has been working to implement these ideas in several of its active prototype projects that leverage AI to operationalize the DoD AI Ethical Principles. The AI prototype projects cover applications including, but not limited to, predictive health, underwater autonomy, predictive maintenance, and supply chain analysis.

This work has accelerated programs by clarifying end goals and roles, aligning expectations, and acknowledging risks and trade-offs from the outset; increased confidence that AI systems are developed, tested, and vetted with highest standards of fairness, accountability, and transparency in mind; supported changes in the way AI technologies are evaluated, selected, prototyped and adopted; helped avoid potential bad outcomes; and provoked and surfaced questions which have spurred conversations that are crucial for AI project success.”

Readers who want more DoD perspectives on AI adoption may be interested in our interview with DIU Director Michael Brown, titled “The US-China Race for AI Predominance, and the Future of AI Military Innovation.”